Since my tech is not cooperating, a quick Monday shot on:

A major conference opens today in France: “Bending the Arc of AI Development Toward Shared Progress”, very much a beyond-doomer, let's-build effort. You can follow live here

Meta moving to Texas, Stargate, more

DeepSeek reservations, China policy, US AI policy

WHY IS THE SUMMIT SO IMPORTANT?

1. The AI Summit in Paris

A. Importance and Global Significance

● International Convergence on AI Challenges: The summit brings together leaders from government, industry, academia, and civil society to discuss AI’s rapid evolution. In a world where AI is reshaping economies and societies, events like this serve as a key meeting point for aligning perspectives on ethics, regulation, and technological innovation.

● Bridging Transatlantic Perspectives: With Europe (and Paris in particular) emphasizing human-centric, ethical approaches to technology, the summit fosters dialogue between European policymakers and their American counterparts. This dialogue is critical as both regions shape standards that will influence global AI governance, trade, and security.

● Economic and Technological Leadership: For the United States—a global hub for AI innovation—the discussions provide insight into emerging international regulatory frameworks and best practices. Such insights help U.S. policymakers and industry leaders anticipate and respond to new market conditions, ensuring competitiveness while addressing societal concerns.

B. Key Discussion Topics

● Ethical and Responsible AI: How to develop, deploy, and scale AI systems in ways that are transparent, fair, and aligned with human values.

● Regulation and Governance: The need for robust policies that balance innovation with safety and privacy, including data protection, bias mitigation, and accountability.

● Security and Safety Measures: Strategies for managing risks associated with increasingly autonomous systems, including cybersecurity challenges and potential misuse of AI technologies.

● Innovation and Economic Impact: How AI is transforming various sectors (healthcare, finance, transportation, etc.) and what this means for workforce development, competitiveness, and economic growth.

C. Main Speakers and Participants

While the exact roster can change from year to year, key speakers typically include:

● Government Officials and Policymakers: High-ranking representatives from France, the European Union, and sometimes U.S. officials engaged in digital or technological policy, discussing regulatory frameworks and international cooperation.

● Industry Leaders: Executives from major technology companies (for example, leaders from companies like Google, Microsoft, or emerging European AI firms) who share insights on commercial innovation and ethical business practices.

● Academic and Research Experts: Thought leaders from top universities and research institutions, providing evidence-based perspectives on AI’s potential and its associated risks.

● Ethicists and Social Scientists: Specialists who examine the societal impacts of AI and propose frameworks for its responsible use.

D. Why It Matters for the United States

● Competitive Edge and Innovation: The U.S. is home to many of the world’s leading AI companies and research institutions. Engagement at global summits helps ensure that U.S. interests and approaches to innovation are both influential and adaptive to international norms.

● Policy and Regulatory Alignment: As debates over AI regulation intensify worldwide, American policymakers benefit from understanding European models and perspectives. This knowledge aids in creating balanced policies that protect consumers and maintain innovation.

● Security and Ethical Considerations: Transatlantic dialogue helps the United States address shared challenges—such as cybersecurity threats and ethical dilemmas—ensuring that domestic policies are informed by a broader, global context.

2. The International AI Safety Report

A. Overview and Authorship

● Who Wrote It: The report is typically the product of an international consortium of AI experts, researchers, policymakers, and representatives from various institutions. Although specific authorship can vary with each edition, such reports are often associated with respected organizations or coalitions dedicated to AI safety (for example, groups like the Future of Life Institute, the Global Partnership on AI, or independent expert panels).

● What It Is: The International AI Safety Report is a comprehensive document that assesses the current landscape of AI development with a focus on safety, risk mitigation, and long-term governance. It synthesizes technical research, policy analysis, and case studies to provide a clear picture of both current challenges and future risks.

B. Why It Matters

● Risk Identification and Mitigation: The report outlines potential safety risks associated with increasingly autonomous AI systems—from unintended biases in decision-making algorithms to larger-scale issues such as loss of control over advanced AI. This information is critical for framing policies that protect public welfare.

● Policy Guidance: By providing a set of recommendations, the report informs governments and regulatory bodies on how to structure oversight mechanisms, invest in safety research, and foster international cooperation to manage AI risks effectively.

● Global Benchmark for Best Practices: Its recommendations often serve as a benchmark for developing national and international standards in AI safety. For countries aiming to be leaders in safe AI development, the report offers actionable guidance that can shape future regulatory frameworks.

C. Implications for the United States

● Strengthening Regulatory Frameworks: For the U.S., where innovation is rapid and market dynamics are complex, the report’s insights can help policymakers design or update regulations that ensure AI is developed responsibly without stifling innovation.

● National Security and Competitive Advantage: Understanding and mitigating risks associated with AI is not only a matter of public safety but also of national security. By incorporating the report’s recommendations, the United States can better protect its critical infrastructure and maintain a leadership role in setting global standards.

● Driving Public and Private Investment: The report underscores the importance of investing in AI safety research. This can guide both public funding and private sector investment in initiatives that mitigate risks, thus ensuring that technological progress benefits society while minimizing potential harms.

In Summary

● The AI Summit in Paris is a pivotal gathering that unites international leaders to address the transformative impact of AI—from ethical considerations and regulatory challenges to economic opportunities. Its global focus, and particularly its transatlantic dialogue, is vital for shaping policies that affect both Europe and the United States.

● The International AI Safety Report plays a complementary role by providing a thorough analysis of AI risks and offering policy recommendations. For the United States, this report is a crucial resource for developing a robust, forward-looking approach to AI governance—ensuring that as technology evolves, safety and ethical standards keep pace.

Together, these initiatives illustrate a growing global consensus on the need to balance innovation with responsibility—a challenge that is as relevant in Paris as it is in Washington, D.C., and across the world.

Below is an expanded discussion that focuses on how the issues raised at the AI Summit in Paris and the International AI Safety Report have specific relevance for United States attorneys. These details illustrate practical examples and legal challenges that U.S. attorneys might face as AI technology becomes further integrated into society and the criminal justice system.

1. Relevance of the AI Summit in Paris for U.S. Attorneys

A. Enhancing Awareness of Global Regulatory Trends

● International Regulatory Dialogue: The summit brings together policymakers, legal experts, and industry leaders to discuss emerging AI regulations and ethical standards. U.S. attorneys must stay informed about these global developments because many AI-driven cases—such as those involving cross-border data breaches or technology misuse—require an understanding of both domestic and international legal frameworks. For example, if a U.S.-based company is involved in an AI-related incident that affects European citizens, attorneys may need to navigate conflicting regulatory standards (like differences between U.S. privacy laws and the EU’s GDPR) during litigation or criminal investigations.

● Case Example: Consider a scenario where an AI system used by a multinational corporation causes discriminatory practices in employment or lending. If investigations reveal that the system’s bias contravenes anti-discrimination laws in both Europe and the U.S., U.S. attorneys would need to coordinate with international counterparts to understand and apply the relevant regulatory nuances.

B. Addressing Emerging Criminal and Civil Challenges

● Investigating Algorithmic Misconduct: U.S. attorneys may encounter cases where AI-driven decisions—such as those made in predictive policing or automated sentencing algorithms—lead to wrongful arrests or unjust outcomes. Understanding the ethical and technical debates highlighted at the summit can guide attorneys in questioning the reliability of algorithmic evidence in court. For instance, if an algorithm contributed to a wrongful conviction, attorneys might explore whether its opacity or inherent biases violated due process or civil rights protections.

● Deepfakes and Digital Manipulation: As AI technology advances, deepfake videos or synthetic audio can be used to manipulate evidence or influence public opinion (e.g., during elections). The summit’s focus on security and safety measures helps attorneys understand the technical markers of manipulated media. In future cases, U.S. attorneys might rely on expert testimony and technical analysis referenced during these international discussions to challenge the admissibility or authenticity of such evidence.

C. Facilitating Transatlantic Cooperation

● Cross-Border Legal Challenges: With AI technologies operating globally, many investigations require cooperation between U.S. law enforcement and their European counterparts. The summit fosters dialogue that can lead to shared protocols, best practices, and intelligence on AI misuse. For example, if an AI system is involved in cybercrimes spanning multiple countries, U.S. attorneys can leverage frameworks discussed at the summit to facilitate extradition, joint investigations, or information sharing.

● Example in Cybersecurity: A coordinated cyberattack might use AI to mimic legitimate user behavior, making it harder to trace. By following the security recommendations and cooperation strategies outlined at international forums like the Paris Summit, U.S. attorneys can better coordinate with agencies such as the FBI and international law enforcement bodies to build cases against perpetrators.

2. Implications of the International AI Safety Report for U.S. Attorneys

A. Understanding AI Risks and Their Legal Ramifications

● Risk Identification and Evidence Integrity: The report lays out potential risks associated with AI systems—such as algorithmic bias, lack of transparency, and system vulnerabilities—that have direct legal implications. U.S. attorneys might use the report as a reference when arguing that the deployment of a flawed AI system contributed to harm. For example, if a financial institution’s AI-based fraud detection tool fails to flag suspicious activity due to a design flaw, leading to significant financial losses or wrongful prosecutions, attorneys may argue that negligence or recklessness was involved based on established best practices for AI safety.

● Forensic Analysis and Expert Testimony: The technical details in the report can assist attorneys in understanding the limitations and potential malfunctions of AI systems. In cases where digital evidence is derived from automated systems, attorneys might bring in technical experts to explain how the algorithms work (or fail) in a courtroom. The report’s case studies and data points help build a factual foundation to challenge the admissibility or interpretation of algorithm-generated evidence.

B. Guiding the Development of Legal Strategies

● Regulatory Compliance and Liability: The report’s recommendations on auditing AI systems, implementing robust oversight, and ensuring transparency can inform legal strategies when holding companies or government agencies accountable. U.S. attorneys can draw upon these insights when building cases that involve corporate negligence or violations of newly established AI safety regulations. For instance, if an autonomous vehicle malfunctions leading to injuries or fatalities, the report’s guidelines on system testing and accountability could underpin arguments for product liability or regulatory non-compliance.

● Example in Financial Fraud: Suppose an AI algorithm is used to manipulate stock markets or execute fraudulent transactions. The report may identify risks related to inadequate system safeguards. U.S. attorneys could then use these findings to bolster a case against those responsible for deploying or failing to properly oversee the AI system, arguing that adherence to international safety recommendations would have prevented the misconduct.

C. Addressing National Security and Cybercrime

● AI in Cyber Operations: As AI tools become more sophisticated, they may be used for launching cyberattacks or automating hacking processes. The International AI Safety Report discusses the risks associated with autonomous decision-making in high-stakes environments. U.S. attorneys involved in cybercrime cases must understand these nuances, including how AI might be used to obfuscate criminal activities. The report’s recommendations could serve as a framework for developing new legal standards to address AI-enhanced cyber threats.

● Example in a Hypothetical Cyberattack: Imagine an AI-driven botnet that orchestrates a large-scale cyberattack on critical infrastructure. In prosecuting such a case, U.S. attorneys would need to demonstrate not only that the attack occurred but also how AI contributed to the sophistication and scale of the breach. The technical guidelines and risk assessments outlined in the report could be critical in framing the discussion around liability and the need for stronger regulatory oversight.

Conclusion: Why This Matters for U.S. Attorneys

For U.S. attorneys, both the AI Summit in Paris and the International AI Safety Report provide more than just academic or technical insights—they offer practical guidance on the emerging challenges at the intersection of law, technology, and international policy. By staying informed about:

● Global Regulatory Trends: Attorneys can better anticipate and navigate transnational legal issues.

● Technical and Ethical Risks: Legal professionals can more effectively challenge or defend AI-generated evidence and ensure that justice is served when algorithmic failures cause harm.

● Best Practices for Oversight: U.S. attorneys gain a reference point for advocating stronger oversight, regulatory compliance, and accountability in cases involving advanced technologies.

As AI continues to evolve, these discussions and reports will increasingly influence how cases are prosecuted, how evidence is evaluated, and how accountability is assigned. For U.S. attorneys, leveraging this knowledge is essential for upholding the law in a rapidly changing technological landscape and ensuring that both individual rights and national security are protected.

NEXT

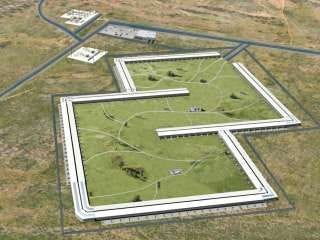

Pictures of Stargate One in Abilene, Texas (this is where my family comes from; 100 years ago my grandfather left the farm for Wall Street) … this is already built by a group called Lancium.
