“IN 3D!”

We talk a lot in AI (re LLMs) about “hallucination.”

Of course this is the phenomenon we experience when AI makes an error or a mistake. It gives the ‘wrong’ output/information.

I have written here before about the scores of lawyers “burned” because they “ran with” a citation for a case that was completely synthetic, in other words false. They called it “hallucination,” yet just ‘who’ hallucinated?

The citation could have come from a John Grisham novel, an Ally McBeal episode, or a moot court brief from a law school.

LLMs have a “Hoover vacuum” nature, and they generally do not index or label their ingredients. As a result, the ‘LLM mind’ sees all information without context or detail. It relies on the person using it to tell it what they seek and what they do not seek.

This is a problem.

Another related problem which gets less notice is “user hallucination.” Maybe Ai Counsel is the first person highlighting this for you?

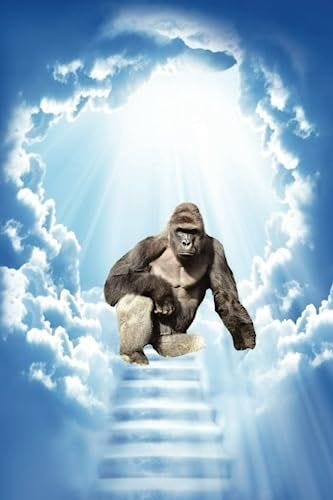

I call it the “Gorilla Effect.”

How much of the reasoning we see in LLMs is what I call the “Gorilla Effect”?

This is that HUMAN tendency to impute human traits to animals, as we often see with people working with gorillas.

Koko

Harambe (RIP!)

We impute to their behavior intellectual and sentient qualities which are not there.

We imagine they are there, and want them to be there, and so the human mind, being naturally completive, “supplies the missing piece.”

Yet it comes from our own imaginations.

Some even do it with weather or plants or other machines, and of course we do it all day long with one another. These are separate topics.

Does the human mind “complete meaning” in the results (outputs) given by LLMs?

Effectively are we seeing what we want to see?

I say yes.

This question should probably be more deeply considered and discussed as a key component in the man-robot interface as we design, engineer, and architect systems of AI in real time. And what is a “system of AI”? Spoiler alert: it's much MORE than an LLM.

An AI System is the entire lifecycle of the thoughtware of AI construction: who will use it and for what (no, I mean precisely for what and for whom), how they will access it, on what tools, in what places, with what linguistic, cultural, or professional varieties of nomenclature or specialty, and what risks to look out for.

I’m just saying we must look out for “human hallucination.” See and account for “The Gorilla Effect.”

No, the robots cannot “imagine us,” but we can imagine them and ourselves.

RIP Harambe.

An intriguing discussion, thanks for sharing. Besides appreciating it for the 'animal' cue (gorillas are an animal that has always fascinated me), it stimulated me and sent me back to the paper by Hannigan et al. (2024), in which the authors go beyond the risk of 'hallucinations' and identify 'botshit': what results when a hallucination is incorporated into an insight or information presented by a human. I also dedicated an issue to this paper: https://theintelligentfriend.substack.com/p/beware-of-your-next-botshit